Install Engines

Install Local Engines

Jan doesn't support installing local engines yet.

Install Remote Engines

Step-by-step Guide

You can add any provider that exposes an OpenAI-compatible API, such as OpenAI, Anthropic, or others. To add a new remote engine:

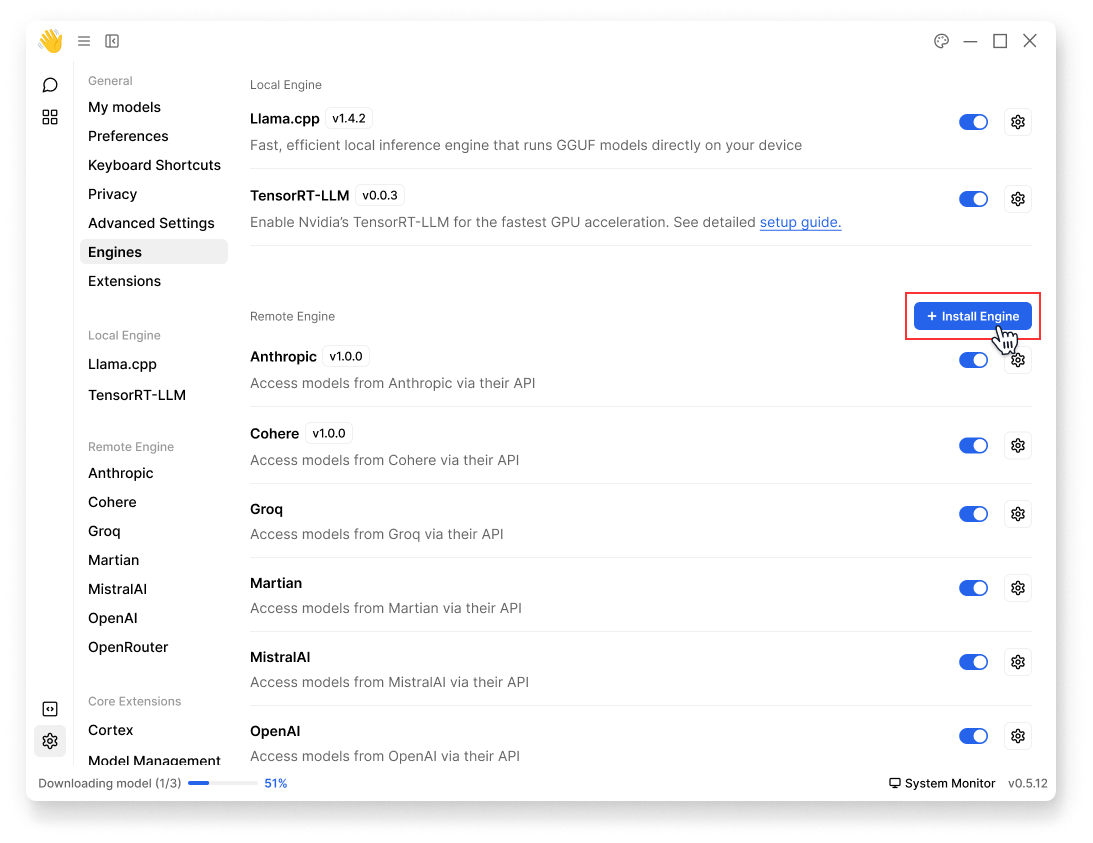

- Navigate to Settings > Engines.

- In the Remote Engine category, click + Install Engine.

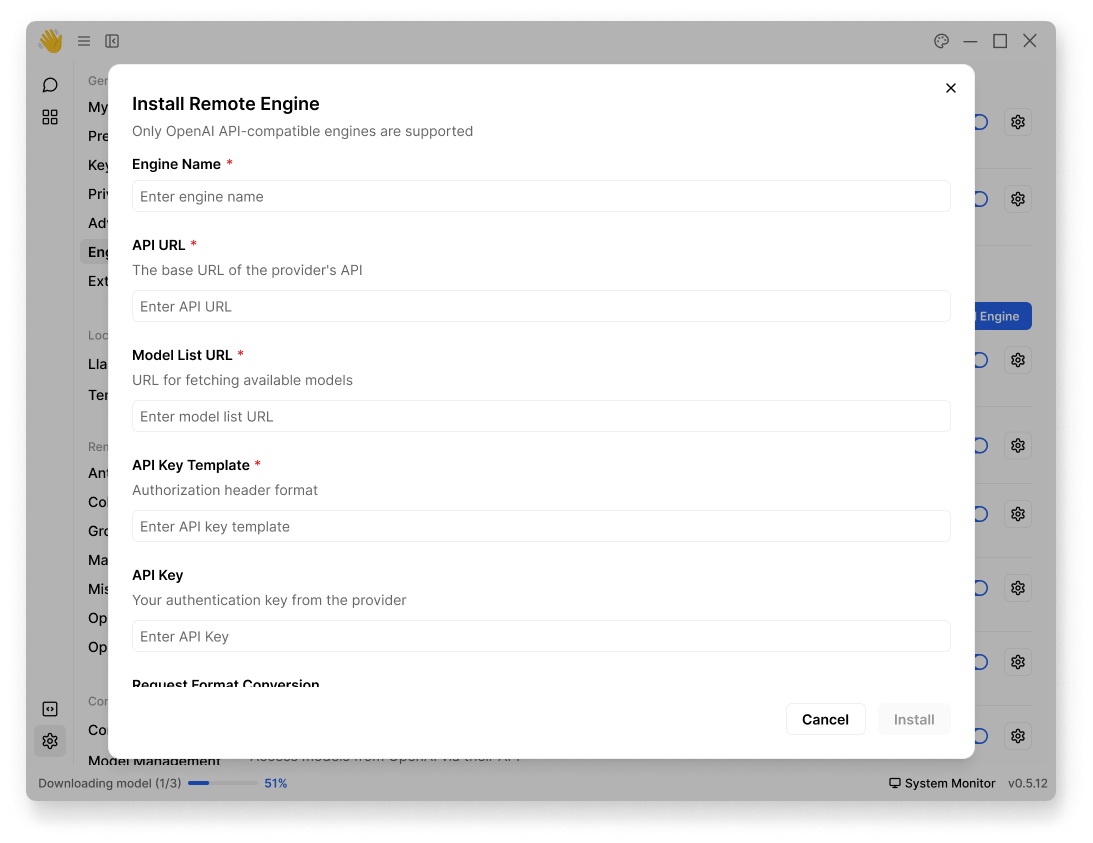

- Fill in the engine details (fields marked ✓ are required):

| Field | Description | Required |
|---|---|---|
| Engine Name | Name for your engine (e.g., "OpenAI", "Claude") | ✓ |
| API URL | The base URL of the provider's API | ✓ |
| API Key | Your authentication key from the provider | ✓ |
| Model List URL | URL for fetching available models | |
| API Key Template | Custom authorization header format | |
| Request Format Conversion | Function to convert Jan's request format to the provider's format | |
| Response Format Conversion | Function to convert the provider's response format to Jan's format | |

- For OpenAI-compatible APIs such as OpenAI, Anthropic, or Groq, fill in only the required fields and leave the optional ones empty. The conversion functions are needed only for providers that don't follow the OpenAI API format.

- Click Install

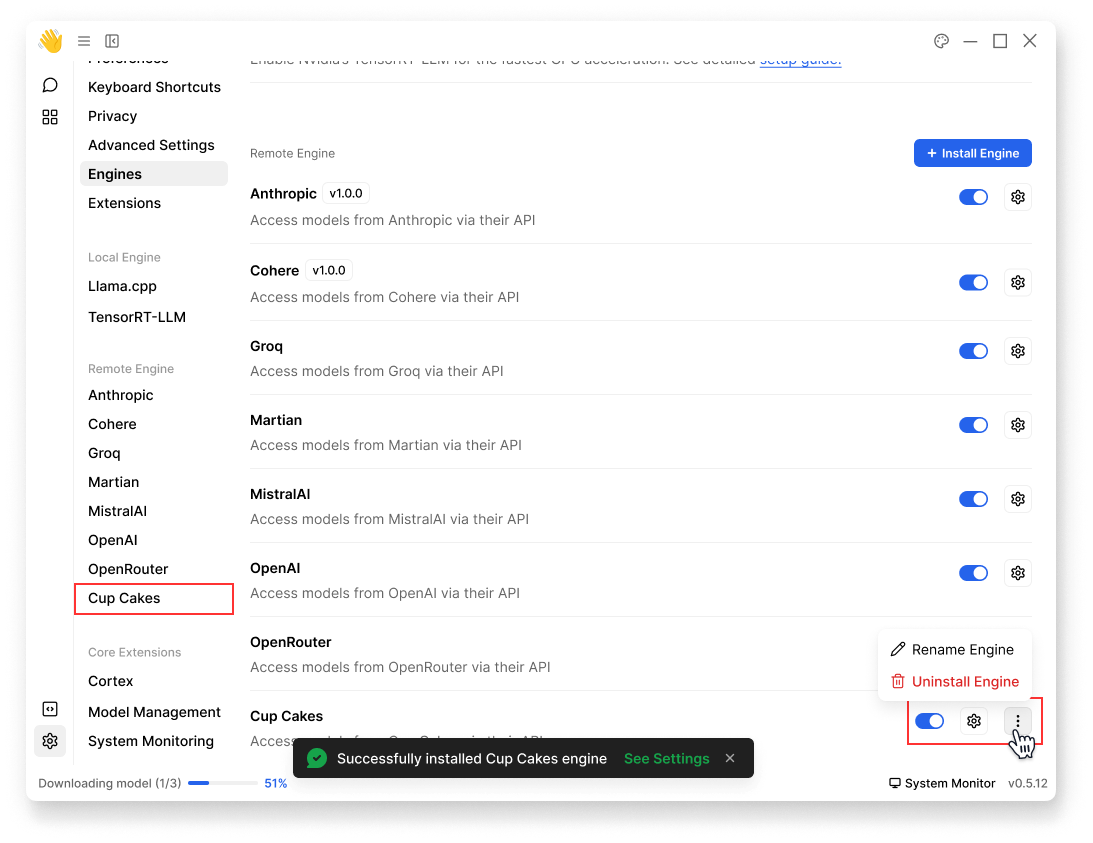

- Once installation completes, your engine appears on the Engines page, where you can:
  - Rename or uninstall the engine
  - Open its settings page
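The API Key Template field controls how your key is attached to each request. This page doesn't spell out the template syntax Jan accepts, so the sketch below assumes a hypothetical `Header-Name: value` template with an `{{api_key}}` placeholder, and falls back to the OpenAI-style `Authorization: Bearer <key>` header when the field is left empty. `buildAuthHeader` is an illustrative name, not part of Jan.

```javascript
// Hypothetical helper: turns an "API Key Template" string into an HTTP header.
// Assumes a "Header-Name: value" template with an {{api_key}} placeholder;
// this syntax is an illustration, not Jan's documented format.
function buildAuthHeader(apiKey, template) {
  // Empty template: use the OpenAI-style bearer token header.
  const effective =
    template && template.trim() !== "" ? template : "Authorization: Bearer {{api_key}}";

  const separator = effective.indexOf(":");
  if (separator === -1) {
    throw new Error(`Invalid API key template: ${effective}`);
  }
  const name = effective.slice(0, separator).trim();
  // split/join replaces every placeholder occurrence without regex escaping.
  const value = effective.slice(separator + 1).trim().split("{{api_key}}").join(apiKey);
  return { [name]: value };
}

console.log(buildAuthHeader("sk-test"));
// { Authorization: 'Bearer sk-test' }
console.log(buildAuthHeader("sk-ant-test", "x-api-key: {{api_key}}"));
// { 'x-api-key': 'sk-ant-test' }
```

The second call shows why a template field is useful at all: some providers (Anthropic's native API, for example) expect the key in an `x-api-key` header rather than a bearer token.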

Examples

OpenAI-Compatible Setup

Here's how to set up OpenAI as a remote engine:

- Engine Name: OpenAI

- API URL: https://api.openai.com

- Model List URL: https://api.openai.com/v1/models

- API Key: Your OpenAI API key

- Leave other fields as default
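Before installing, you can check that the Model List URL and API key work by querying the endpoint yourself. The sketch below is not Jan's code. It assumes Node.js 18+ (for the built-in `fetch`) and the standard OpenAI list response shape, `{ "object": "list", "data": [{ "id": ... }] }`. The function names are hypothetical.

```javascript
// Extracts model IDs from an OpenAI-style /v1/models response body,
// which has the shape { object: "list", data: [{ id, object, ... }] }.
function parseModelList(body) {
  if (!body || !Array.isArray(body.data)) {
    throw new Error("Unexpected model list format: missing data array");
  }
  return body.data.map((model) => model.id).filter((id) => typeof id === "string");
}

// Fetches and parses the list from a Model List URL (Node 18+ global fetch).
async function fetchModelIds(modelListUrl, apiKey) {
  const response = await fetch(modelListUrl, {
    headers: { Authorization: `Bearer ${apiKey}` },
  });
  if (!response.ok) {
    throw new Error(`Model list request failed: HTTP ${response.status}`);
  }
  return parseModelList(await response.json());
}

// Offline example using a trimmed-down response body:
const sample = {
  object: "list",
  data: [
    { id: "model-a", object: "model" },
    { id: "model-b", object: "model" },
  ],
};
console.log(parseModelList(sample)); // [ 'model-a', 'model-b' ]
```

Against the real endpoint you would call something like `fetchModelIds("https://api.openai.com/v1/models", process.env.OPENAI_API_KEY)`, where `OPENAI_API_KEY` is an assumed environment variable name. A non-2xx status here usually points to a wrong key or URL rather than a problem in Jan.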

Custom APIs Setup

If you're integrating an API that doesn't follow OpenAI's format, you'll need to use the conversion functions. Let's say you have a custom API with this format:

Request format:

```json
{
  "prompt": "What is AI?",
  "max_length": 100,
  "temperature": 0.7
}
```

Response format:

```json
{
  "generated_text": "AI is...",
  "tokens_used": 50,
  "status": "success"
}
```

Here's how to set it up in Jan:

- Engine Name: Custom LLM

- API URL: https://api.customllm.com

- API Key: your_api_key_here

Conversion Functions:

- Request: Convert from Jan's OpenAI-style format to your API's format

- Response: Convert from your API's format back to OpenAI-style format

- Request Format Conversion:

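```javascript
function convertRequest(janRequest) {
  // Use the most recent message as the prompt.
  const lastMessage = janRequest.messages[janRequest.messages.length - 1];
  return {
    prompt: lastMessage.content,
    // `??` keeps explicit values such as temperature 0; `||` would replace them.
    max_length: janRequest.max_tokens ?? 100,
    temperature: janRequest.temperature ?? 0.7,
  };
}
```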
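The `convertRequest` function above forwards only the last message, so earlier turns of the conversation never reach the custom API. If your API accepts only a single `prompt` string, one option is to flatten the whole history into it. `convertRequestWithHistory` is a hypothetical name, and the `role: content` line layout is an arbitrary choice for illustration. Use whatever prompt format your model actually expects.

```javascript
// Variant of convertRequest that keeps the full conversation by flattening
// every message into one prompt string. The "role: content" layout is an
// illustrative choice, not a format the custom API is known to require.
function convertRequestWithHistory(janRequest) {
  const messages = Array.isArray(janRequest.messages) ? janRequest.messages : [];
  const prompt = messages.map((message) => `${message.role}: ${message.content}`).join("\n");
  return {
    prompt,
    // `??` keeps explicit zeros (e.g. temperature 0) instead of replacing them.
    max_length: janRequest.max_tokens ?? 100,
    temperature: janRequest.temperature ?? 0.7,
  };
}

const request = {
  messages: [
    { role: "system", content: "Be brief." },
    { role: "user", content: "What is AI?" },
  ],
  temperature: 0,
};
console.log(convertRequestWithHistory(request).prompt);
// system: Be brief.
// user: What is AI?
```

Flattening trades structure for completeness: the model sees all prior turns, but long conversations can exceed the API's input limit, so you may want to drop the oldest messages first.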

- Response Format Conversion:

```javascript
function convertResponse(apiResponse) {
  // Wrap the generated text in an OpenAI-style chat completion.
  return {
    choices: [{ message: { role: "assistant", content: apiResponse.generated_text } }],
    usage: { total_tokens: apiResponse.tokens_used },
  };
}
```
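The two conversion functions wrap a single request/response cycle: convert Jan's request, send it to the provider, then convert the reply back. The sketch below illustrates that flow under stated assumptions; it is not Jan's internal implementation. The `runConversion` and `fakeSend` names are hypothetical, and `send` stands in for the HTTP call so the flow can be exercised without a real provider.

```javascript
// Hypothetical round trip: Jan-style request -> custom API -> Jan-style response.
// `send` abstracts the HTTP call; in practice it might POST to your API URL.
async function runConversion(janRequest, { convertRequest, convertResponse, send }) {
  const providerRequest = convertRequest(janRequest);
  const providerResponse = await send(providerRequest);
  return convertResponse(providerResponse);
}

// Stub provider that mimics the custom API's response format.
async function fakeSend(body) {
  return { generated_text: `Echo: ${body.prompt}`, tokens_used: 3, status: "success" };
}

runConversion(
  { messages: [{ role: "user", content: "What is AI?" }], max_tokens: 100 },
  {
    convertRequest: (req) => ({
      prompt: req.messages[req.messages.length - 1].content,
      max_length: req.max_tokens ?? 100,
    }),
    convertResponse: (res) => ({
      choices: [{ message: { role: "assistant", content: res.generated_text } }],
      usage: { total_tokens: res.tokens_used },
    }),
    send: fakeSend,
  },
).then((janResponse) => console.log(janResponse.choices[0].message.content)); // Echo: What is AI?
```

Injecting `send` keeps the conversion logic testable offline; swapping the stub for a real HTTP call does not change either conversion function.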

Expected Formats:

- Jan's Request Format:

```json
{
  "messages": [
    { "role": "user", "content": "What is AI?" }
  ],
  "max_tokens": 100,
  "temperature": 0.7
}
```

- Jan's Expected Response Format:

```json
{
  "choices": [
    {
      "message": { "role": "assistant", "content": "AI is..." }
    }
  ],
  "usage": { "total_tokens": 50 }
}
```

⚠️ Make sure to test your conversion functions thoroughly. Incorrect conversions may cause errors or unexpected behavior.
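One way to test is to run sample payloads through your functions and check the result against Jan's expected response format shown above. The hypothetical checker below mirrors only that documented example (`choices[0].message` with role `"assistant"` and string content, plus a numeric `usage.total_tokens`). Jan itself may accept or require other fields.

```javascript
// Checks an object against the response shape documented above.
// Returns a list of problems; an empty list means the shape matches.
function validateJanResponse(response) {
  const problems = [];
  const message = response?.choices?.[0]?.message;
  if (!message) {
    problems.push("missing choices[0].message");
  } else {
    if (message.role !== "assistant") problems.push('message.role should be "assistant"');
    if (typeof message.content !== "string") problems.push("message.content should be a string");
  }
  if (typeof response?.usage?.total_tokens !== "number") {
    problems.push("usage.total_tokens should be a number");
  }
  return problems;
}

// Example: a conversion that forgot to map tokens_used.
const broken = { choices: [{ message: { role: "assistant", content: "AI is..." } }], usage: {} };
console.log(validateJanResponse(broken)); // [ 'usage.total_tokens should be a number' ]
```

In practice you would call `validateJanResponse(convertResponse(sampleApiResponse))` for a few representative responses, including error or empty ones from your API, before pasting the function into Jan.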